This post is older than a year. Consider that some information might no longer be accurate.


Used: Elasticsearch v2.4.6, Kibana v4.6.6, docker-compose v1.23.2

Compose is a tool for defining and running multi-container Docker applications. With Compose, you define the services to run in a YAML file and then bring them up with the docker-compose command.

This post provides starter recommendations for Docker Compose. I use a support case scenario with Elasticsearch to demonstrate its usage. Additionally, I explain how to get started with the latest Elasticsearch and Kibana for local development and experiments.

Advantages

- You describe the multi-container setup in a clear manifest and bring up the containers in a single command.

- You can define the start order and the dependencies between containers.

- It is excellent for setting up development and testing environments.

- You can spin up legacy environments without polluting your host or client system.
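The start order implied by depends_on can be pictured as a topological sort of the service graph. The following Python sketch (standard library graphlib, Python 3.9+) only illustrates that idea and is not how Compose is implemented; the helper name start_order is hypothetical. Note that with the 3.x file format, depends_on controls only the start order: Compose does not wait until a dependency is ready to serve requests.

```python
from graphlib import CycleError, TopologicalSorter


def start_order(depends_on: dict[str, list[str]]) -> list[str]:
    """Return the services so that each one comes after the services it depends on.

    `depends_on` maps a service name to the names listed under its depends_on key
    (hypothetical helper for illustration, not part of Compose).
    Raises graphlib.CycleError if the dependencies form a cycle.
    """
    return list(TopologicalSorter(depends_on).static_order())


# ki01 (Kibana) depends on es01 (Elasticsearch), as in the manifests of this post.
print(start_order({"es01": [], "ki01": ["es01"]}))  # ['es01', 'ki01']

try:
    start_order({"a": ["b"], "b": ["a"]})
except CycleError:
    print("circular depends_on")
```

Compose also refuses to start services with circular dependencies, so raising an error is the sensible behaviour for the sketch as well.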

YAML file

The default filename is docker-compose.yml. You can name the manifest as you like, but then you have to pass the filename to the docker-compose command with the -f option.

For instance, for legacy-elasticsearch.yml:

docker-compose -f legacy-elasticsearch.yml up

Environment file

For a customer, I have to use the following versions:

- Elasticsearch 2.4.6

- Kibana 4.6.6

Compose supports declaring default environment variables in an environment file named .env placed in the folder where the docker-compose command runs.

Define .env like a properties file:

```properties
ELASTIC_VERSION=2.4-alpine
KIBANA_VERSION=4.6.6
```
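To make the substitution concrete: Compose replaces ${ELASTIC_VERSION} and ${KIBANA_VERSION} in the manifest with the values from .env, values set in the shell environment take precedence over .env, and unset variables become an empty string (with a warning). The Python sketch below mimics only these rules; the helper names parse_env_file and substitute are hypothetical, and Compose features such as ${VAR:-default} or $$ escaping are deliberately left out.

```python
import re
from typing import Mapping

# Matches ${NAME} or $NAME (simplified: no ${NAME:-default}, no $$ escaping).
_VARIABLE = re.compile(r"\$\{(\w+)\}|\$(\w+)")


def parse_env_file(text: str) -> dict[str, str]:
    """Parse KEY=VALUE lines, skipping blank lines and # comments."""
    values: dict[str, str] = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#"):
            continue
        key, separator, value = line.partition("=")
        if separator:
            values[key.strip()] = value.strip()
    return values


def substitute(template: str, env_file: Mapping[str, str], shell_env: Mapping[str, str]) -> str:
    """Replace variables; shell values override .env values, unset ones become ''."""
    merged = {**env_file, **shell_env}

    def replace(match: re.Match) -> str:
        name = match.group(1) or match.group(2)
        return merged.get(name, "")

    return _VARIABLE.sub(replace, template)


env = parse_env_file("ELASTIC_VERSION=2.4-alpine\nKIBANA_VERSION=4.6.6\n")
print(substitute("image: elasticsearch:${ELASTIC_VERSION}", env, {}))
# image: elasticsearch:2.4-alpine
```

Passing os.environ as shell_env would mirror the precedence Compose applies when you run, for example, ELASTIC_VERSION=2.4-alpine docker-compose up.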

Note that v2.4.6 has been EOL (End of Life) since 2018-02-28. You can find the details on the Elastic EOL Support page. Kibana compatibility is listed in the Support Matrix.

Basic Docker Manifest

In the manifest docker-compose.yml, we declare our containers under services. The Compose file format version is mandatory. I use the latest version, 3.7; adjust it to your needs.

- The first container is elasticsearch and named es01.

- The second container is kibana and named ki01. It depends on the first container.

- The application versions come from the environment variables in .env.

- Both containers use the custom Docker network esnet.

- The Elasticsearch data is stored on the named volume data.

- The default port for Elasticsearch is 9200.

- Kibana uses the service name es01 to access Elasticsearch.

- The default port for Kibana is 5601.

```yaml
version: '3.7'
services:
  es01:
    image: elasticsearch:${ELASTIC_VERSION}
    container_name: elasticsearch
    environment:
      - cluster.name=cinhtau
      - bootstrap.memory_lock=true
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - "data:/usr/share/elasticsearch/data"
    ports:
      - 9200:9200
    networks:
      - esnet
  ki01:
    image: kibana:${KIBANA_VERSION}
    container_name: kibana
    hostname: kibana
    ports: ['5601:5601']
    networks: ['esnet']
    depends_on: ['es01']
    restart: on-failure
    environment:
      - ELASTICSEARCH_URL=http://es01:9200
volumes:
  data:
networks:
  esnet:
```
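The manifest sets bootstrap.memory_lock=true together with an unlimited memlock ulimit. Elastic's documentation for recent versions suggests verifying that memory locking actually succeeded with the request GET _nodes?filter_path=**.mlockall. Below is a minimal Python sketch of that check, assuming Elasticsearch is reachable on the published port 9200 of localhost; the function names are hypothetical and the sample response is illustrative. For the legacy stack the check is especially worthwhile, because Elasticsearch 2.x still used the older setting name bootstrap.mlockall, which was renamed to bootstrap.memory_lock in 5.0.

```python
import json
import urllib.request


def parse_mlockall(payload: dict) -> dict[str, bool]:
    """Map node id -> mlockall flag from a `_nodes?filter_path=**.mlockall` response."""
    nodes = payload.get("nodes", {})
    return {node_id: bool(info["process"]["mlockall"]) for node_id, info in nodes.items()}


def fetch_mlockall(base_url: str = "http://localhost:9200", timeout: float = 10.0) -> dict[str, bool]:
    """Query the nodes info API (assumed reachable at base_url) and return each node's flag."""
    url = f"{base_url}/_nodes?filter_path=**.mlockall"
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return parse_mlockall(json.load(response))


# Illustrative response shape (made-up node id):
sample = {"nodes": {"abc123": {"process": {"mlockall": True}}}}
print(parse_mlockall(sample))  # {'abc123': True}
```

A value of false means the process could not lock its memory, for example because the memlock ulimit or the setting did not take effect.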

To validate and view the Compose file, use:

docker-compose config

Note that the keys in the YAML output are sorted alphabetically.

Start Containers

To start the container stack, we use:

docker-compose up

The above command produces, for instance, the following log output:

```
docker-compose up
Creating elasticsearch ... done
Creating kibana ... done
Attaching to elasticsearch, kibana
..
elasticsearch | [2019-01-07 16:26:35,265][INFO ][node ] [Clive] version[2.4.6], pid[1], build[5376dca/2017-07-18T12:17:44Z]
..
kibana | {"type":"log","@timestamp":"2019-01-07T16:26:36Z","tags":["listening","info"],"pid":15,"message":"Server running at http://0.0.0.0:5601"}
elasticsearch | [2019-01-07 16:26:38,875][INFO ][node ] [Clive] initialized
elasticsearch | [2019-01-07 16:26:38,875][INFO ][node ] [Clive] starting ...
elasticsearch | [2019-01-07 16:26:39,044][INFO ][transport ] [Clive] publish_address {172.22.0.2:9300}, bound_addresses {0.0.0.0:9300}
..
elasticsearch | [2019-01-07 16:26:49,981][INFO ][cluster.routing.allocation] [Clive] Cluster health status changed from [RED] to [YELLOW] (reason: [shards started [[.kibana][0]] ...]).
kibana | {"type":"log","@timestamp":"2019-01-07T16:26:52Z","tags":["status","plugin:elasticsearch@1.0.0","info"],"pid":15,"state":"green","message":"Status changed from yellow to green - Kibana index ready","prevState":"yellow","prevMsg":"No existing Kibana index found"}
```

You can also start the containers in detached mode.

docker-compose up -d
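In detached mode, the log lines shown earlier, such as the cluster health change from RED to YELLOW, no longer appear in your terminal. If a script or test suite should wait until Elasticsearch is ready, the cluster health API can block until a minimum status is reached via its wait_for_status and timeout parameters. A minimal Python sketch, assuming the published port 9200 on localhost; wait_for_status is a hypothetical helper name. On a single node, yellow is usually the best status for indices with replicas, since a replica shard is never allocated on the same node as its primary.

```python
import json
import urllib.error
import urllib.request


def wait_for_status(base_url: str = "http://localhost:9200", status: str = "yellow",
                    timeout_s: int = 30) -> dict:
    """Ask Elasticsearch to wait until the cluster health is at least `status`.

    Returns the parsed health response; its "timed_out" flag tells whether the
    status was reached within `timeout_s` seconds.
    """
    url = (f"{base_url}/_cluster/health"
           f"?wait_for_status={status}&timeout={timeout_s}s")
    try:
        # Give the HTTP client a bit longer than the server-side wait.
        with urllib.request.urlopen(url, timeout=timeout_s + 10) as response:
            return json.load(response)
    except urllib.error.HTTPError as error:
        # A timed-out wait may be answered with HTTP 408 and a JSON body.
        if error.code == 408:
            return json.load(error)
        raise
```

For example, call health = wait_for_status() before running integration tests and check health["timed_out"] before continuing.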

View the status of the containers (truncated example).

```
docker container ls
CONTAINER ID   IMAGE                      COMMAND                  PORTS                              NAMES
e08b58cce7a2   kibana:4.6.6               "/docker-entrypoint.…"   0.0.0.0:5601->5601/tcp             kibana
310ca00501c9   elasticsearch:2.4-alpine   "/docker-entrypoint.…"   0.0.0.0:9200->9200/tcp, 9300/tcp   elasticsearch
```

To view the container logs, use the container name or ID, e.g. docker logs elasticsearch.

Stop Containers

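To stop the containers, use:

docker-compose stop

To stop and remove the containers and networks, use:

docker-compose down

Named volumes and images are kept by default. Add --volumes to also remove the named volumes (this deletes the Elasticsearch data) and --rmi all to also remove the images.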

This outputs:

```
Stopping kibana ... done
Stopping elasticsearch ... done
Removing kibana ... done
Removing elasticsearch ... done
Removing network es2_esnet
```

docker-compose offers more options. To view the help, run:

docker-compose --help

Customize Builds

In the previous section, we used containers from public images. Now we customise the Docker images.

- Elasticsearch: We add the curl application to the Docker image for the health check.

- Kibana: We add curl to the Docker image and install the Kibana Sense plugin (Web Console).

Therefore we create a separate Dockerfile for each of them. A Dockerfile is a text file that describes the steps Docker needs to take to prepare an image, including installing packages, creating directories, and defining environment variables, among other things.

Elasticsearch

For Elasticsearch we use the elasticsearch/Dockerfile:

```dockerfile
# VERSION is supplied as a build argument by docker-compose
ARG VERSION
FROM elasticsearch:$VERSION
LABEL maintainer="me@cinhtau.net"
RUN apk add --no-cache curl
```

The Elasticsearch base image is based on Alpine Linux, so we install curl with apk.

Kibana

For Kibana we use the kibana/Dockerfile:

```dockerfile
# VERSION is supplied as a build argument by docker-compose
ARG VERSION
FROM kibana:$VERSION
LABEL maintainer="me@cinhtau.net"
RUN apt-get update \
    && apt-get install -y curl \
    && apt-get clean \
    && /opt/kibana/bin/kibana plugin --install elastic/sense
```

The Kibana base image is based on Debian (Jessie), so we install curl with apt and then install the Kibana Sense plugin.

Build Section

In the docker-compose.yml file, we now define the build sections. Furthermore, we add health checks for the respective containers.

```yaml
version: '3.7'
services:
  es01:
    build:
      context: elasticsearch
      args:
        VERSION: "${ELASTIC_VERSION}"
    image: my-elasticsearch:${ELASTIC_VERSION}
    container_name: elasticsearch
    environment:
      - cluster.name=cinhtau
      - bootstrap.memory_lock=true
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - "data:/usr/share/elasticsearch/data"
    ports:
      - 9200:9200
    networks:
      - esnet
    healthcheck:
      test: ["CMD", "curl", "-f", "http://0.0.0.0:9200"]
      interval: 30s
      timeout: 10s
      retries: 5
  ki01:
    build:
      context: kibana
      args:
        VERSION: "${KIBANA_VERSION}"
    image: my-kibana:${KIBANA_VERSION}
    container_name: kibana
    hostname: kibana
    ports: ['5601:5601']
    networks: ['esnet']
    depends_on: ['es01']
    restart: on-failure
    environment:
      - ELASTICSEARCH_URL=http://es01:9200
    healthcheck:
      test: ["CMD", "curl", "-s", "-f", "http://localhost:5601"]
      retries: 6
volumes:
  data:
networks:
  esnet:
```

The build context is the directory in which docker-compose looks for the Dockerfile (a file named Dockerfile by default). We pass the version number as the build argument VERSION, which the ARG instruction in the Dockerfile expects. To build the images, run:

docker-compose build

Alternatively, use up, which also builds the images if they do not exist yet, and then creates and starts the containers.

docker-compose up -d
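Both healthcheck tests call curl with -f inside the container: curl then exits with a non-zero code when the HTTP status is 400 or higher or when it cannot connect at all, and Docker counts a non-zero exit code of the test as a failed check. The Python sketch below applies the same pass/fail rule, for example to probe the published ports from the host; http_probe is a hypothetical name. One difference from the curl calls in the manifest: urllib follows redirects, while curl does so only with -L.

```python
import http.client
import urllib.request


def http_probe(url: str, timeout: float = 10.0) -> bool:
    """Return True for a successful response, False otherwise (like `curl -f`).

    urlopen raises HTTPError (a subclass of OSError) for status codes >= 400,
    and URLError/OSError for refused connections or timeouts.
    """
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except (OSError, http.client.HTTPException):
        return False
```

For instance, http_probe("http://localhost:5601") should return True once Kibana is serving requests.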

Container Healthcheck

The defined health check is reflected in the container status. Right after docker-compose up, docker ps shows:

```
CONTAINER ID   IMAGE                         COMMAND                  CREATED         STATUS                            PORTS                              NAMES
d08ef20c64da   my-kibana:4.6.6               "/docker-entrypoint.…"   2 seconds ago   Up 1 second (health: starting)    0.0.0.0:5601->5601/tcp             kibana
ba412a4b872a   my-elasticsearch:2.4-alpine   "/docker-entrypoint.…"   3 seconds ago   Up 2 seconds (health: starting)   0.0.0.0:9200->9200/tcp, 9300/tcp   elasticsearch
```

Once the containers have finished starting, it shows:

```
CONTAINER ID   IMAGE                         COMMAND                  CREATED              STATUS                        PORTS                              NAMES
9ceba48d1b75   my-kibana:4.6.6               "/docker-entrypoint.…"   About a minute ago   Up About a minute (healthy)   0.0.0.0:5601->5601/tcp             kibana
66f19678d820   my-elasticsearch:2.4-alpine   "/docker-entrypoint.…"   About a minute ago   Up About a minute (healthy)   0.0.0.0:9200->9200/tcp, 9300/tcp   elasticsearch
```

The health check instruction tells Docker how to test a container to check that it is still working. This health check can detect cases such as Kibana being stuck in the UI optimisation and not yet ready to serve requests.
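The status column follows a few simple rules: a container starts as starting, becomes healthy after the first successful check, and becomes unhealthy only after retries consecutive failed checks (a check that takes longer than timeout also counts as failed). The Python model below replays a sequence of check results under these rules; it ignores timing (interval, timeout, start_period), and health_states is a hypothetical name, so treat it as an illustration rather than Docker's implementation.

```python
from typing import Iterable


def health_states(check_results: Iterable[bool], retries: int = 3) -> list[str]:
    """Replay health check results (True = passed) and return the status after each check.

    Simplified model: timing, timeout and start_period are ignored;
    retries defaults to 3, like Docker's default.
    """
    status = "starting"
    failing_streak = 0
    states = []
    for passed in check_results:
        if passed:
            status, failing_streak = "healthy", 0
        else:
            failing_streak += 1
            if failing_streak >= retries:
                status = "unhealthy"
        states.append(status)
    return states


# Kibana-like run with retries: 6, five failures while optimising, then a success.
print(health_states([False] * 5 + [True], retries=6))
# ['starting', 'starting', 'starting', 'starting', 'starting', 'healthy']
```

With retries: 6 as configured for Kibana, five failed checks while Kibana is still optimising its UI leave the status at starting, which matches the (health: starting) output above.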

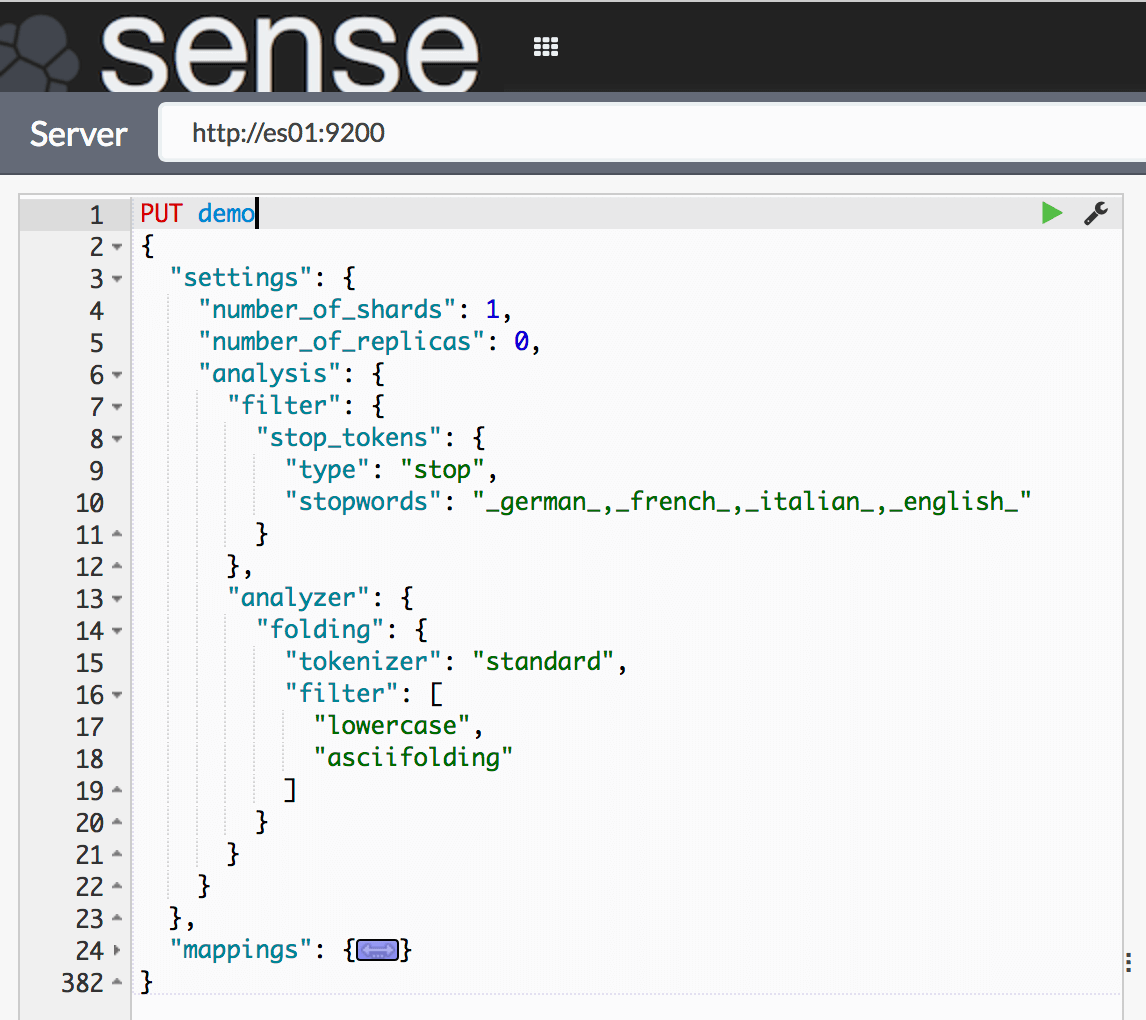

Kibana Sense

Since Kibana accesses Elasticsearch as es01, change the Sense server URL from http://localhost:9200 to http://es01:9200.

Latest Versions

To use more recent versions of Elasticsearch and Kibana, I create a new .env file in a separate folder.

```properties
ELASTIC_VERSION=6.5.4
SECURITY_ENABLED=false
MONITORING_ENABLED=false
```

Since version 5, all Elastic Stack products share the same version number, so a single variable, ELASTIC_VERSION, is enough. Since version 6.3, the X-Pack extensions are bundled with the default distribution. To enable or disable the X-Pack Security and Monitoring features, we use the variables SECURITY_ENABLED and MONITORING_ENABLED.

I create the Compose file elastic-single-node.yml, which uses the above variables. Elastic provides the Docker images in its own registry, docker.elastic.co.

```yaml
version: '3.7'
services:
  es01:
    image: docker.elastic.co/elasticsearch/elasticsearch:${ELASTIC_VERSION}
    container_name: elasticsearch
    environment:
      - cluster.name=cinhtau
      - bootstrap.memory_lock=true
      - node.name=stretch_armstrong
      - xpack.license.self_generated.type=trial
      - xpack.security.enabled=${SECURITY_ENABLED}
      - xpack.monitoring.enabled=${MONITORING_ENABLED}
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - "es-data:/usr/share/elasticsearch/data"
    ports:
      - 9200:9200
    networks:
      - esnet
  ki01:
    image: docker.elastic.co/kibana/kibana:${ELASTIC_VERSION}
    container_name: kibana
    hostname: kibana
    ports: ['5601:5601']
    networks: ['esnet']
    depends_on: ['es01']
    restart: on-failure
    environment:
      - XPACK_MONITORING_ENABLED=${MONITORING_ENABLED}
      - ELASTICSEARCH_URL=http://es01:9200
volumes:
  es-data:
networks:
  esnet:
```
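The two images pick up these settings differently. The Elasticsearch image passes environment variables whose names contain a dot, such as xpack.security.enabled, to Elasticsearch as settings. The Kibana image instead expects upper-case names with underscores, such as XPACK_MONITORING_ENABLED and ELASTICSEARCH_URL, and, as far as I know, translates them only for a fixed list of supported settings. The Python sketch below illustrates that translation; the function name and the shortened settings list are assumptions for illustration, not code from the Kibana image.

```python
from typing import Iterable, Mapping


def kibana_settings_from_env(env: Mapping[str, str], known_settings: Iterable[str]) -> dict[str, str]:
    """Translate upper-case env vars to Kibana settings, e.g. ELASTICSEARCH_URL -> elasticsearch.url.

    Hypothetical helper: only settings in `known_settings` are considered,
    and empty values are skipped.
    """
    settings = {}
    for setting in known_settings:
        env_name = setting.upper().replace(".", "_")
        value = env.get(env_name, "")
        if value:
            settings[setting] = value
    return settings


# Shortened, illustrative list; the real image supports many more settings.
KNOWN = ["elasticsearch.url", "server.name", "xpack.monitoring.enabled"]
print(kibana_settings_from_env(
    {"ELASTICSEARCH_URL": "http://es01:9200", "XPACK_MONITORING_ENABLED": "false"}, KNOWN))
# {'elasticsearch.url': 'http://es01:9200', 'xpack.monitoring.enabled': 'false'}
```

Environment variables that do not correspond to a listed setting are simply ignored in this sketch.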

To start the stack and try out the newest Elasticsearch and Kibana features, run:

docker-compose -f elastic-single-node.yml up

To stop and remove the stack again, run:

docker-compose -f elastic-single-node.yml down

Summary

- Docker Compose is a useful tool to manage container stacks on your client.

- For deployment scenarios on distributed servers, other tools such as Docker Swarm, Kubernetes or Ansible are better suited.

- You manage all related containers with a single command.

- Find the example files in this public GitHub repository.