This post is older than a year. Consider some information might not be accurate anymore.

Used: elasticsearch v6.2.4

Tuning Elasticsearch is an advanced topic, but attending the Elasticsearch Engineer Training by Elastic makes it much easier to understand. The usual answer to any tuning question is: it depends. Here I summarise my notes and findings. Keep in mind that there is always a lot more to it; the training covers it all and is undoubtedly worth attending. Education is important ;-). Roughly, it is about Elasticsearch internals (Apache Lucene under the hood), capacity planning, and smart choices/anticipation.

What are the key points to consider?

- How many data nodes do you have? Assume we have 3 data nodes.

- How many primary shards does your index have?

- How many replicas (replica shards) do you have?

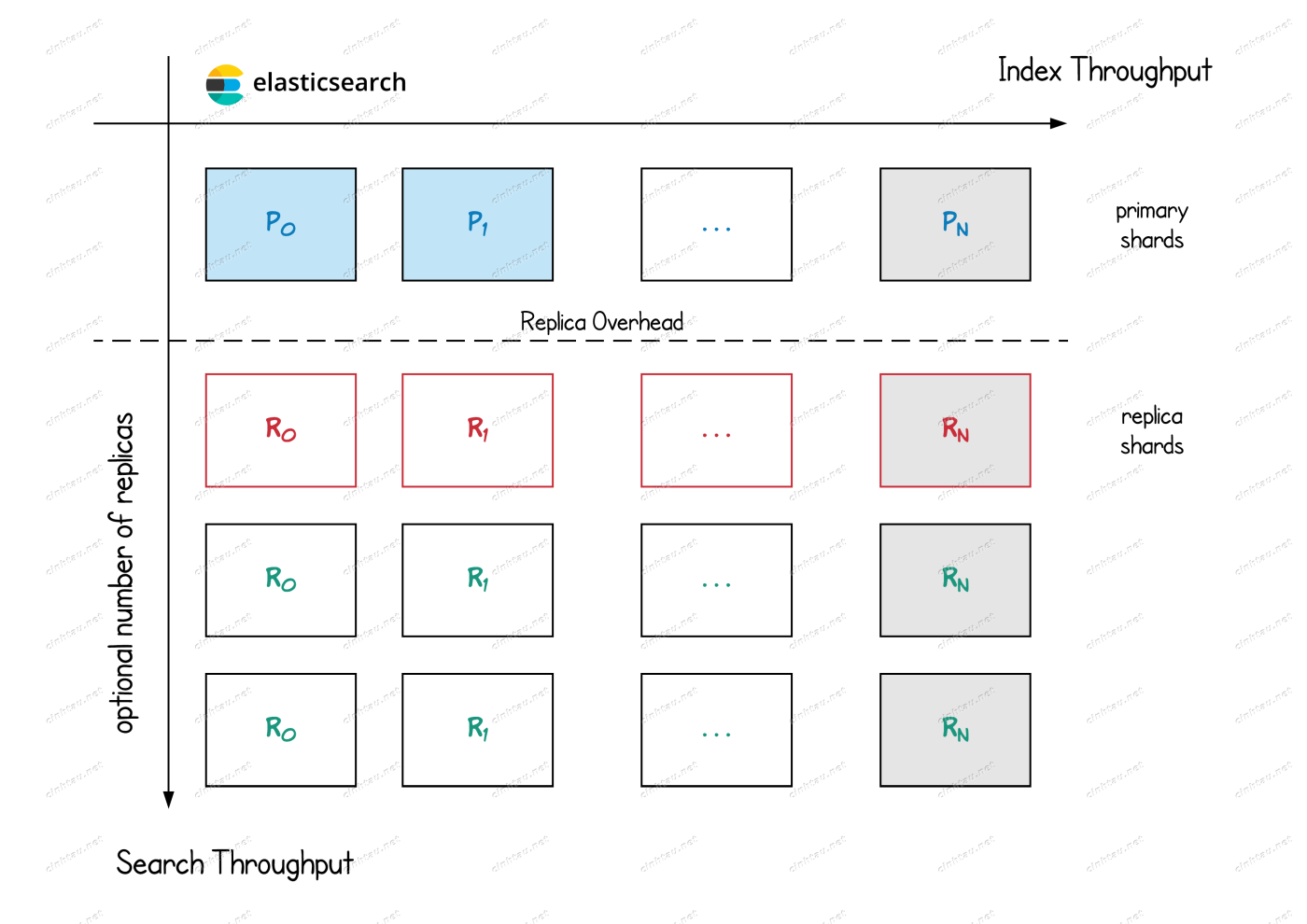

Following graphic illustrates the mechanics.
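The primary and replica counts from the key points correspond to two index settings, `number_of_shards` and `number_of_replicas`. A minimal sketch of a request body carrying them follows; the helper name `index_settings` and the index name `my-index` are only placeholders for illustration.

```python
import json


def index_settings(primary_shards: int, replicas: int) -> dict:
    """Build a create-index request body (hypothetical helper, not an Elasticsearch API)."""
    if primary_shards < 1:
        raise ValueError("an index needs at least one primary shard")
    if replicas < 0:
        raise ValueError("the replica count cannot be negative")
    return {
        "settings": {
            "index": {
                "number_of_shards": primary_shards,   # set when the index is created
                "number_of_replicas": replicas,       # can be changed later
            }
        }
    }


# Body one could send with e.g. PUT /my-index (placeholder index name)
print(json.dumps(index_settings(primary_shards=3, replicas=1), indent=2))
```

The primary shard count is chosen when the index is created, while the replica count can be adjusted afterwards, which is why the shard count deserves the most thought up front.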

Sharding Concept

An index may consist of one or more shards. Sharding is vital for two primary reasons:

- It allows you to split/scale your content volume horizontally over several data nodes

- It allows you to distribute and parallelise operations across shards (potentially on multiple nodes) thus increasing performance/throughput

See Basic Concepts

How many shards an index should have is often derived from these points.

- An ideal shard holds up to about 40 GB and stays below 50 GB; this is only an orientation figure

- A little overallocation is not bad: with 3 data nodes and the default of 5 primary shards per index, 2 data nodes each hold 2 shards of the index and the third holds 1

- When you add data nodes, Elasticsearch allocates the extra shards to the newly joined nodes

- Keep in mind to scale out horizontally to avoid cluster stress or hotspot situations

- Kagillion Shards is the overallocation situation

- If many shards have to compete for the same resources on the same machine, your overall performance decreases

- Scaling out should be done in phases. Build in enough capacity to get to the next phase.

- Are 3 shards for 3 data nodes ok?

- Adding data nodes then neither reduces the workload nor eases a hotspot situation, since the index has no extra primary shards to move to the new nodes.

- You lose the advantage of scaling out in this scenario, but it may work fine until it gets problematic.
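The sizing points above can be sketched as simple arithmetic. This is a rough model under stated assumptions: the 40 GB target is the orientation figure from the list, the function names are made up for illustration, and the even spread is a simplification of how Elasticsearch actually balances shards.

```python
import math
from typing import List


def primary_shards_for(index_size_gb: float, target_shard_gb: float = 40.0) -> int:
    """Estimate the primary shard count so that no shard exceeds the target size."""
    return max(1, math.ceil(index_size_gb / target_shard_gb))


def spread_over_nodes(shards: int, nodes: int) -> List[int]:
    """Spread shards as evenly as possible; returns the shard count per node."""
    base, extra = divmod(shards, nodes)
    return [base + 1 if i < extra else base for i in range(nodes)]


print(primary_shards_for(180))   # 180 GB / 40 GB = 4.5, rounded up to 5
print(spread_over_nodes(5, 3))   # [2, 2, 1]: slight overallocation on 3 nodes
print(spread_over_nodes(5, 5))   # [1, 1, 1, 1, 1]: room to grow to 5 nodes
print(spread_over_nodes(3, 5))   # [1, 1, 1, 0, 0]: new nodes get nothing
```

The last line shows the 3-shards-on-3-nodes case: after scaling out to 5 nodes, two of them hold no primary shard of the index, so the added hardware does not relieve the others.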

Primary Shards

Having more primary shards does not make the individual indexing operation faster, but it increases indexing throughput.

More primary shards increase indexing throughput, but they also slow down individual search requests, since the results must be fetched from more shards.

Replica Shards

The graphic shows primary and replica shards.

- A replica is a copy of a primary shard.

- It provides redundancy: if the primary fails, a replica is promoted to primary.

- This is the failover for a high-availability scenario.

- Elasticsearch then tries to create a new replica of the new primary.

- This self-healing is a significant strength of Elasticsearch.

- Having even one replica causes management overhead.

- The overhead is the same for 1 replica or n replicas.

At index time, a replica shard does the same amount of work as the primary shard. New documents are first indexed on the primary and then on any replicas.

See also Replica Shards

Replica Impacts

- Regarding Tuning Indexing Speed, setting the number of replicas to `0` and disabling the refresh interval for the initial load increases the indexing speed significantly.

- Regarding Tuning Search Speed, replicas can help with search traffic throughput, but not always.

- Replica shards also use resources; choose a smart trade-off between throughput and availability.
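The initial-load advice above boils down to two index settings that are changed before the load and restored afterwards. A minimal sketch of the two request bodies, assuming they are sent to the update-settings endpoint of a placeholder index (`PUT /my-index/_settings`); the helper names are hypothetical, and the restore values (1 replica, `1s` refresh) are only example targets.

```python
import json


def bulk_load_settings() -> dict:
    """Settings for the initial load: no replicas, refresh disabled."""
    return {"index": {"number_of_replicas": 0, "refresh_interval": "-1"}}


def restore_settings(replicas: int = 1, refresh_interval: str = "1s") -> dict:
    """Settings to apply once the load has finished (example values)."""
    return {"index": {"number_of_replicas": replicas, "refresh_interval": refresh_interval}}


# Before the load: PUT /my-index/_settings (placeholder index name)
print(json.dumps(bulk_load_settings()))
# After the load: same endpoint, replicas and refresh switched back on
print(json.dumps(restore_settings(replicas=2)))
```

Until the replicas are restored the index has no redundancy, so this only suits data that can be reloaded from its source if a node fails during the load.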

More replicas result in better search throughput, but they slow down individual indexing requests.

The first replica is “the most expensive” one, as it nearly doubles the indexing request time, but at the same time, it also adds the most value as you also get data redundancy with the very first replica.
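One way to see why the first replica weighs the most is to count shard-level write operations per document. The sketch below only counts work (one write on the primary plus one per replica); it does not measure request time, and the function names are made up for illustration.

```python
def shard_writes_per_document(replicas: int) -> int:
    """Shard-level index operations for one document: the primary plus every replica."""
    if replicas < 0:
        raise ValueError("replicas must be >= 0")
    return 1 + replicas


def relative_increase(replicas: int) -> float:
    """Extra work added by the last replica, relative to having one replica fewer."""
    if replicas < 1:
        raise ValueError("need at least one replica to compare against")
    before = shard_writes_per_document(replicas - 1)
    after = shard_writes_per_document(replicas)
    return (after - before) / before


for r in (1, 2, 3):
    print(r, shard_writes_per_document(r), f"+{relative_increase(r):.0%}")
# 1 2 +100%
# 2 3 +50%
# 3 4 +33%
```

In this simple count, going from 0 to 1 replica doubles the work, while each further replica adds a smaller relative share.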

Recommendation

- Setting the number of replicas to 2 for an index is highly recommended.

- This results in 3 copies of every shard (1 primary + 2 replicas). An index with 3 primary shards therefore has 9 shards in total.

- Losing one copy of a shard is fairly likely, losing 2 is unlikely, and losing all 3 is rare.

- Having one replica is acceptable, but the management overhead is the same as for n replicas.

- In this case, you save disk space and consume fewer resources, but accept a higher risk to availability.

- Having 1 replica is the minimum requirement for safety.

- If safety is not an issue, disable replicas and gain better performance.
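The shard arithmetic behind this recommendation can be checked with a few lines. A minimal sketch with hypothetical function names; it relies on the fact that Elasticsearch does not place two copies of the same shard on one node, so replicas that find no free node stay unassigned.

```python
def total_shards(primaries: int, replicas: int) -> int:
    """All shard copies of an index: every primary plus its replicas."""
    return primaries * (1 + replicas)


def unassigned_replica_shards(primaries: int, replicas: int, data_nodes: int) -> int:
    """Replica shards that cannot be placed because each copy needs its own node."""
    copies_per_shard = 1 + replicas
    return primaries * max(0, copies_per_shard - data_nodes)


print(total_shards(primaries=3, replicas=2))                              # 9
print(unassigned_replica_shards(primaries=3, replicas=2, data_nodes=3))   # 0
print(unassigned_replica_shards(primaries=3, replicas=2, data_nodes=2))   # 3
```

With 2 replicas you therefore need at least 3 data nodes before every copy is assigned, which matches the 3-node assumption used throughout this post.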

Cluster Management

Shards also have an impact on cluster management. The elected master manages the cluster.

- Every shard has an initial cost regardless of how much data it contains

- Every shard is managed in the cluster state

- More shards ⇒ larger cluster state ⇒ more work for the master node ⇒ more network crosstalk

While some overallocation is useful for capacity planning, too much leads to the Kagillion Shards problem: the stress of managing far more shards than necessary negates any benefit of increased write capacity on the cluster.
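To get a feeling for how quickly shards add up across a cluster, the sketch below sums them over a set of indices. The index names and counts are invented for illustration (30 hypothetical daily indices on the 3 data nodes assumed earlier); only the arithmetic is the point.

```python
from typing import Dict, Tuple


def cluster_shard_count(indices: Dict[str, Tuple[int, int]]) -> int:
    """Sum all shard copies; each value is (primary shards, replicas) of one index."""
    return sum(primaries * (1 + replicas) for primaries, replicas in indices.values())


def shards_per_node(total: int, data_nodes: int) -> float:
    """Average number of shards each data node has to host."""
    return total / data_nodes


# 30 hypothetical daily indices, once with 5 primaries and once with 1 primary
overallocated = {f"logs-{day:02d}": (5, 1) for day in range(1, 31)}
modest = {f"logs-{day:02d}": (1, 1) for day in range(1, 31)}

print(cluster_shard_count(overallocated), shards_per_node(cluster_shard_count(overallocated), 3))  # 300 100.0
print(cluster_shard_count(modest), shards_per_node(cluster_shard_count(modest), 3))                # 60 20.0
```

Every one of those shards is an entry in the cluster state that the master has to track, whether or not it holds much data.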

Summary

- Elasticsearch tuning is a challenging task.

- Gather the cluster and index details to analyse.

- Determine the right number of shards for an index.

- Anticipate scale-out scenarios.

- Replicas are for availability and throughput.

- Choose a wise trade-off between required performance and stability.

Overall, this is only a tiny fragment of the Elasticsearch Engineer Training and of how to tune an Elasticsearch cluster. If you get the chance to attend the training, take it. Even if you already know most of this from experience, discussing it with Elasticsearch engineers gives you valuable insights. I wrote this article with feedback from Dan Palmer-Bancel and Daniel Schneiter. Check for the next training opportunities on the Elastic Training Schedule.